Data Archiving: The differentiator of ERP services

Effectiveness and efficiency are the cornerstones of modern ERP (Enterprise Resource Planning) solutions. Today’s ERP vendors must address the compounding pressure of ever-expanding data. In other words, they need to bring in robust data archiving solutions. But archiving ERP applications can get tricky. Many companies seek to retire their initial ERP applications (and, in some cases, the mainframe apps and proprietary platforms that preceded them), but they’re not sure how to transition gracefully while maintaining access to all their data. This data still has value, not to mention liability potential, so archiving it takes careful thought. A skillful ERP solutions vendor can come to the rescue.

According to Fortune Business Insights, the global ERP software market will reach USD 93.34 billion in 2028, growing at a CAGR of 9.2% during the 2021-2028 period.

This implies booming ERP data growth in the coming years. Archiving old data to make room for fresh incoming data is as important as ever. A system that runs efficiently, in turn, makes it easier to realize a return on the ERP investment.

What is data archiving?

Data archiving is the process of identifying, extracting, and transferring data that is no longer in active use to a secure and accessible location. In the initial phases of building an ERP application, the focus is on making as much data as possible accessible in one system, under one roof. The flip side is the complex web of parent-child relationships between data tables. It can be difficult to pull data out of a relational database without breaking something, for example by removing a parent record that child records still reference. Your ERP solutions vendor needs to have tools in place for efficient data archival.
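To make the parent-child problem concrete, here is a minimal sketch in Python using the standard-library `sqlite3` module. The table names (`orders`, `order_items`, and their `_archive` counterparts), the sample rows, and the cutoff date are all assumptions made up for illustration; a real ERP schema is far larger, and commercial archiving tools work differently. The sketch only shows the ordering idea: copy parents before children, delete children before parents, and do it all in one transaction.

```python
import sqlite3

# Hypothetical two-table schema: each order (parent) has line items (children).
SCHEMA = """
CREATE TABLE orders (id INTEGER PRIMARY KEY, order_date TEXT NOT NULL);
CREATE TABLE order_items (
    id INTEGER PRIMARY KEY,
    order_id INTEGER NOT NULL REFERENCES orders(id),
    sku TEXT NOT NULL
);
CREATE TABLE orders_archive (id INTEGER PRIMARY KEY, order_date TEXT NOT NULL);
CREATE TABLE order_items_archive (
    id INTEGER PRIMARY KEY,
    order_id INTEGER NOT NULL REFERENCES orders_archive(id),
    sku TEXT NOT NULL
);
"""

OLD_ORDER_IDS = "SELECT id FROM orders WHERE order_date < ?"


def build_demo_db():
    """Create an in-memory database with a few made-up rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # enforce parent-child links
    conn.executescript(SCHEMA)
    with conn:
        conn.executemany(
            "INSERT INTO orders VALUES (?, ?)",
            [(1, "2015-03-01"), (2, "2023-06-15")],
        )
        conn.executemany(
            "INSERT INTO order_items VALUES (?, ?, ?)",
            [(1, 1, "A-100"), (2, 1, "B-200"), (3, 2, "C-300")],
        )
    return conn


def archive_orders(conn, cutoff_date):
    """Move orders dated before cutoff_date, with their items, to the archive.

    Returns the number of orders moved. Dates are ISO strings (YYYY-MM-DD),
    so plain string comparison orders them correctly.
    """
    with conn:  # one transaction: commit on success, roll back on any error
        # Copy parents first, then children, so archive references resolve.
        conn.execute(
            "INSERT INTO orders_archive "
            "SELECT * FROM orders WHERE order_date < ?",
            (cutoff_date,),
        )
        conn.execute(
            "INSERT INTO order_items_archive "
            f"SELECT * FROM order_items WHERE order_id IN ({OLD_ORDER_IDS})",
            (cutoff_date,),
        )
        # Delete children before parents so no live row is left orphaned.
        conn.execute(
            f"DELETE FROM order_items WHERE order_id IN ({OLD_ORDER_IDS})",
            (cutoff_date,),
        )
        cur = conn.execute(
            "DELETE FROM orders WHERE order_date < ?", (cutoff_date,)
        )
        return cur.rowcount
```

Calling `archive_orders(build_demo_db(), "2020-01-01")` moves the one order dated before 2020, together with its two line items, and leaves the newer order untouched. Wrapping the four statements in a single transaction means a failure halfway through rolls everything back, so the live tables and the archive never disagree.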

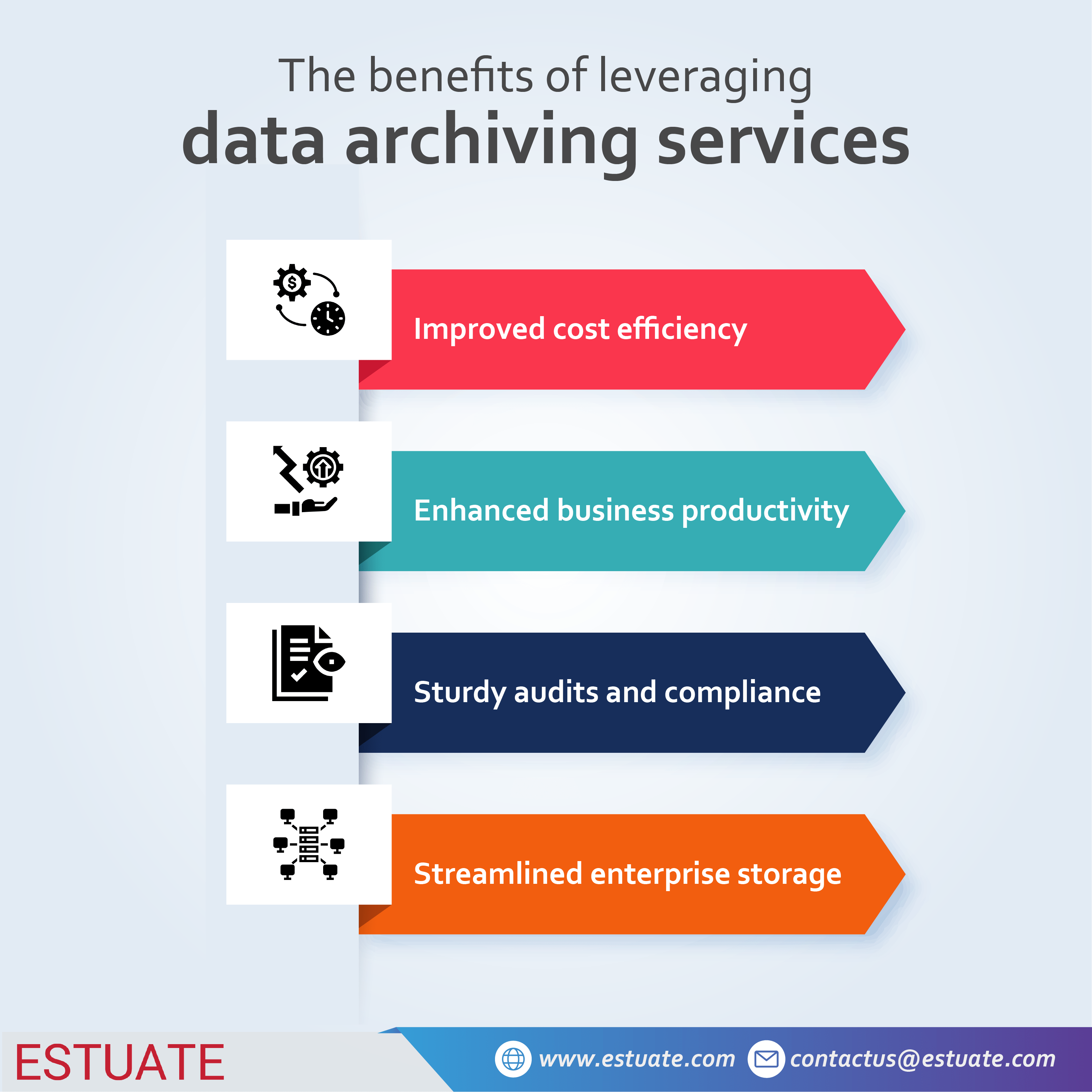

Why do you need data archiving solutions?

- Improves cost efficiency

- Enhances business productivity

- Supports audits and compliance

- Streamlines enterprise storage

1. Improves cost efficiency

In today’s data-overload environment, storage needs are at an all-time high. Without proper infrastructure, this can put unwarranted pressure on your servers, especially if you are storing everything on site, and it demands colossal investments in server upkeep. Many enterprise compliance standards require that all corporate data (including ERP data) be stored for substantial periods of time. So, when data deletion is not an option, it is prudent to store data on remote/off-premise servers or in the cloud. This is what data archiving services are all about. In the absence of a well-maintained data repository, companies often have to outsource the search for the information they need and incur huge costs in the process.

Data archiving services also take the load off your IT teams and make you more self-reliant when it comes to accessing the archived data. The archives are designed to be both affordable and accessible.

2. Enhances business productivity

Data archival solutions streamline ERP data end to end. It is record management of sorts. Business operation applications, such as ERP, often gather a lot of data in a very short period of time. This creates a lot of unnecessary pressure on your on-premise storage facilities. Cloud storage solutions are better suited for such requirements. All the data your software tools accumulate over a stipulated period of time can instead be archived for easy access and analysis. This not only saves on-premise server space but also helps all of your installations run quickly and efficiently.

By carefully structuring the collected data, the process of data archival also makes data retrieval easier. Redundant copies are removed and only the unique data is stored. This further improves business communication, as all stakeholders access and collaborate on the same versions of the data files.
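One common way to store only unique data is to identify each file by a hash of its content. The sketch below is an illustration under stated assumptions, not a description of how any particular archiving product works: the function name `deduplicate` and the sample file names are made up, and the hashing uses Python's standard `hashlib` module.

```python
import hashlib


def deduplicate(records):
    """Store each distinct payload once, keyed by its SHA-256 content hash.

    records: iterable of (name, payload_bytes) pairs.
    Returns (store, index): store maps hash -> payload, and index maps
    each record name -> the hash of its content.
    """
    store = {}  # content hash -> payload, kept once
    index = {}  # record name -> content hash
    for name, payload in records:
        digest = hashlib.sha256(payload).hexdigest()
        store.setdefault(digest, payload)  # keep only the first copy
        index[name] = digest
    return store, index


# Hypothetical example: two of the three files have identical content.
files = [
    ("invoice_2019.csv", b"id,amount\n1,100\n"),
    ("invoice_2019_copy.csv", b"id,amount\n1,100\n"),
    ("invoice_2020.csv", b"id,amount\n2,250\n"),
]
store, index = deduplicate(files)
```

Here `store` holds two payloads for three file names, and both copies of the 2019 invoice point at the same stored content, which is what lets every stakeholder work from a single version.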


3. Supports audits and compliance

Data governance and compliance are integral components of smooth enterprise operations. Businesses go through frequent third-party audits and legal reviews. There is always some data that is no longer in active use but must still be kept at hand for these requirements, and ERP data is no exception. A sound ERP solution vendor has mechanisms in place to identify and automatically archive such data. Application data masking and application retirement are other data management solutions that can pave your way to strong compliance standards.
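As a rough illustration of data masking, the sketch below replaces sensitive fields with a keyed hash so that the original values are hidden while the same input always yields the same masked token (which keeps masked records joinable). Everything here is an assumption for illustration: the field names, the `MASKED-` prefix, the 12-character token length, and the key are made up, and commercial masking tools offer far richer, format-preserving options. It relies only on Python's standard `hmac` and `hashlib` modules.

```python
import hashlib
import hmac


def mask_value(value, secret_key):
    """Return a deterministic, non-reversible token for a sensitive value."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return "MASKED-" + digest.hexdigest()[:12]


def mask_record(record, sensitive_fields, secret_key):
    """Return a copy of record with the sensitive fields masked."""
    return {
        field: mask_value(str(value), secret_key)
        if field in sensitive_fields
        else value
        for field, value in record.items()
    }


# Hypothetical employee record and key, for illustration only.
employee = {"id": 7, "name": "Ann Example", "tax_id": "123-45-6789"}
masked = mask_record(employee, {"name", "tax_id"}, b"demo-key")
```

The `id` field passes through unchanged, while `name` and `tax_id` become opaque tokens. In practice the key would have to be stored and rotated securely, which this sketch does not address.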

4. Streamlines enterprise storage

It’s always easier to gain insights from data when it’s stored in a single, shared location. If it is scattered across multiple devices and networks, it becomes difficult to leverage data as enterprise intel. To add to the challenge, data often comes in various shapes and sizes from varying sources – structured and unstructured, traditional and non-traditional. This, of course, means recurring efforts in server maintenance. A centralized repository of data (ERP or otherwise) with business-critical user access can make enterprise operations more efficient.

With data archiving solutions in place, storage can be streamlined and maintained in one go. You can also plug in select ERP service integrations to get the outputs you need.

Why do many ERP vendors often miss out on data archiving?

If you’ve ever struggled with the process of purging, archiving, or extracting data for a mid-sized or large enterprise, you may have wondered aloud, “Why didn’t my ERP solutions vendor provide a better way to do this?”

In 1986, Oracle started developing a new generation of business applications that would transform the playing field. Up until that point, ERP systems had been proprietary. They were either written for IBM mainframes (as SAP’s accounting software suite was), or for one of the various mini-computer platforms.

Oracle’s new software would propel ERP one step closer to truly open systems. How? It was written for a new generation of hardware platforms—such as Sequent Computers and Pyramid Computers—that ran on a generic version of the UNIX operating system. Adding to the flexibility, the Oracle relational database could also run on proprietary platforms. Thus, companies using the new Oracle Financials suite with an Oracle database could run their software on virtually anything.

Suddenly, enterprises truly had a choice of hardware and software. Although IBM had invented the relational database, Oracle was running with it.

However, that wasn’t the only pro-customer change. The user interface in these new applications was vastly improved. Previous generations of ERP had offered users a traditional mainframe green screen. You would fill the screen with data and press a button. Since there was no field-level validation, errors would only come back to you after you’d processed an entire screen.

Oracle’s new apps, on the other hand, offered much richer interaction between computer and user. And because they ran on a relational database, users could query data on demand, rather than using the cumbersome nightly reporting associated with mainframe computing. These innovations naturally enabled much greater productivity—not to mention, user satisfaction.

The dynamics between data management and data archiving in ERP

The uptake of ERP applications has been dramatic over time, and the urge to load these systems with huge amounts of corporate data has been unstoppable. So it was only a matter of time before businesses realized that aging ERP applications come with a more serious problem: it’s really hard to get data out of them. This set the stage for corporate data repositories, that is, data archives, to enter the picture.

Estuate is the most trusted data archival partner of IBM InfoSphere Optim

At Estuate, we have deep experience in the implementation of IBM InfoSphere Optim solutions, and other IBM Unified Governance and Integration products. Together, we have more than 350 successful implementations.

Related read: Application archiving and retirement solutions with IBM InfoSphere Optim

With the IBM InfoSphere Optim Archive solution, you can seamlessly archive little-used data and retire obsolete applications. The archived data can be accessed at any time.
